When you begin to build anything related to data, you have to get a clear understanding about the question(s) you want to get solved, and the data you want to use to “ask” to find an answer. What sounds like a banality is indeed an essential technique of breaking down questions on the one hand, understanding your data sources on the other hand, so that you eventually get to questions, data and granularity that matches so that you can finally start building.

Let’s do this for my coffee dashboard. What I am interested in is:

- how many cups of hot beverages do we consume every day?

- is there any impact of the day in the week or the hour of the day?

- is there any change over time?

- Which products does or team choose? Does time have any relevance here?

- How many cups of hot beverages does each employee consume per day?

Let’s look at the data side now. One data source is our Franke A400 – a professional coffee maker that runs off an embedded Linux system. It has two (hidden) USB ports that you can use to deploy or retrieve data, including the product listing, UI tweaking and consumption details. I ran an export and the results are quite promising:

In the XML file there are nodes for each event that moved something in the machine. The nodes contain information on date and time, product consumed and a lot of other details, most of them rather unintelligible due to cryptic naming’. Have a look at the XML to understand the importance of proper naming AND/OR get a good idea of a noneffective “security” strategy called obfuscation (which proposes that things are hidden away properly if only the purpose of the data is obscured as much as possible).

<En

Uid="uid-533"

Ti="2019-07-25T15:50:11"

TiUtc="2019-07-25T13:50:11"

Ist="0"

Isu="1"

Re="12"

Pp="45"

Io="222"

Na="Milchkaffee"

X="Small"

Pl="5"

Ib="798"

Rt="279"

Bt="67"

Wc="0"

Min="0"

Max="0"

Mav="0"

Fl="0"

Ms="80"

Tf="167"

Bam="110"

Bpt="10"

Guid="d9b26925-8a90-425f-8f0f-c651402d38f5"

Ver="1.0.1">

<Miv>0</Miv>

<Miv>0</Miv>

<Miv>0</Miv>

<Miv>0</Miv>

<Miv>0</Miv>

<Dd>25</Dd>

<Dd>0</Dd>

<Tb>94</Tb>

<Tb>91</Tb>

<Tb>93</Tb>

<Tl>0</Tl>

<Tl>0</Tl>

<Tl>255</Tl>

<Tl>255</Tl>

<Tl>0</Tl>

<Bi>1234</Bi>

<Bi>1234</Bi>

</En>We’ll discuss the data later, but one thing that’s obvious from just one record: we don’t get information on the person that just had a cup of coffee, nor do we have any number of users that are potentially eligible to use the machine. Who’s on vacation? Who’s not? And how many guests were in the building? Long story short: we can’t answer these questions from the machine data straightaway, so we have two options (and guess what: we’re going to choose them sequentially, which isn’t uncommon in software projects):

- We defer the question as it can’t be answered with the data we got. This is always a tradeoff, and for a real project, you have to find a way to get good balance. The set of questions a customer has defined the budget you get, and the expected ROI might get out of reach if you’re trimming too rigidly. On the other hand, if you promise results that are not grounded on data that you have access to, there will be unpleasant discussions later.

- We have to bring in additional data – that’s pretty obvious, but how do we bring the data together?

For our case, option #1 is the way to go – knowing the actual number of employees and guests is just a fancy addition, so we can keep that for later.

Back to our example. There are a number of issues:

- Cryptic field names – you should have seen this immediately. There’s not much we can do about it, but Einstein Analytics will help us to see the data in context later.

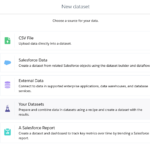

- XML! Now that’s bad luck, because Einstein Analytics doesn’t list XML as an upload format:

To get our data into analytics, we have to take another step, and that is parsing the XML file into a CSV format. There are a few options, and – being a lazy person – I chose the one that is most comfortable for me.

For the sake of completeness, if the questions comes up frequently, you should consider using XSLT to write a transformation template. XSL means Extensible Stylesheet Language, and T is for Transformation. And XSLT file explains how to “translate” one XML source into another structured or semi-structured file. Unfortunately, my XSLT skills are zero, so I postponed XSLT for the time being (maybe another day and another blogpost) and turned to PHP (the first programming language i kind-of-learned. Technically, it’s possible to load the data files to a Salesforce org, read them with Apex and process the data into Salesforce objects. But PHP happens to run locally on my machine and reduces the hazzle to create objects and code in Salesforce. So here’s the core of my code…

/**

* $files has been dynamically filled with all log files in the directory...

*//

foreach ($files as $file) {

$xml=simplexml_load_file($file) or die("Error: Cannot create object");

//excuse me for using a clumsy hardcoded way to iterate over the attributes...

foreach ($xml->En as $Entity) {

echo $file.",";

echo $Entity['Uid'].",";

echo $Entity['Ti'].",";

echo $Entity['TiUtc'].",";

echo $Entity['Ist'].",";

echo $Entity['Isu'].",";

echo $Entity['Re'].",";

echo $Entity['Pp'].",";

echo $Entity['Io'].",";

echo $Entity['Na'].",";

echo $Entity['X'].",";

echo $Entity['Pl'].",";

echo $Entity['Bt'].",";

echo $Entity['Wc'].",";

echo $Entity['Min'].",";

echo $Entity['Max'].",";

echo $Entity['Mav'].",";

echo $Entity['Fl'].",";

echo $Entity['Ms'].",";

echo $Entity['Tf'].",";

echo $Entity['Bam'].",";

echo $Entity['Bpt'].",";

echo $Entity['Guid'].",";

echo $Entity['Ver'].",";

// and a number of values are attached as child nodes to our parent node...

foreach ($Entity->children() as $child) {

echo $child.",";

}

echo "\n";

}

}If we fire away a script with this snippet and pipe the output to a file, we’ll get a handy csv:

php parsexml.php > datafile.csvLet’s load this into Einstein Analytics to explore what’s in the data we just loaded:

Turns out: The data types are correct, but the data is still almost incomprehensible. Luckily enough, Einstein Analytics allows us to deal with this… Read more in the next blog post.